John Sohrawardi, Rochester Institute of Technology and Matthew Wright, Rochester Institute of Technology

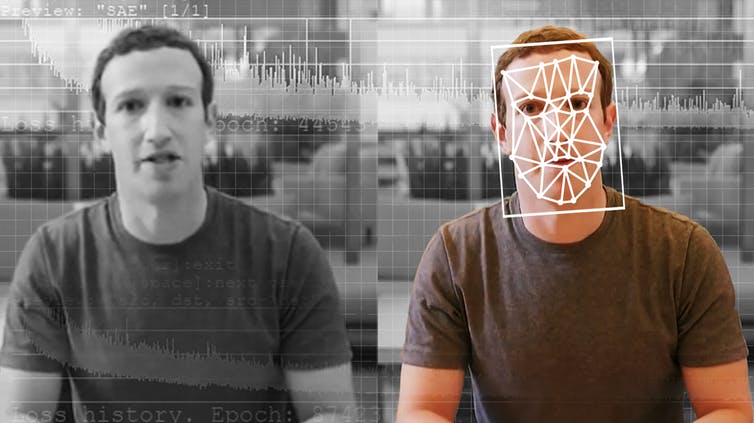

An investigative journalist receives a video from an anonymous whistleblower. It shows a candidate for president admitting to illegal activity. But is this video real? If so, it would be huge news – the scoop of a lifetime – and could completely turn around the upcoming elections. But the journalist runs the video through a specialized tool, which tells her that the video isn’t what it seems. In fact, it’s a “deepfake,” a video made using artificial intelligence with deep learning.

Journalists around the world could soon be using a tool like this. In a few years, a tool like this could even be used by everyone to root out fake content in their social media feeds.

As researchers who have been studying deepfake detection and developing a tool for journalists, we see a future for these tools. They won’t solve all our problems, though, and they will be just one part of the arsenal in the broader fight against disinformation.

The problem with deepfakes

Most people know that you can’t believe everything you see. Over the last couple of decades, savvy news consumers have gotten used to seeing images manipulated with photo-editing software. Videos, though, are another story. Hollywood directors can spend millions of dollars on special effects to make up a realistic scene. But using deepfakes, amateurs with a few thousand dollars of computer equipment and a few weeks to spend could make something almost as true to life.

Deepfakes make it possible to put people into movie scenes they were never in – think Tom Cruise playing Iron Man – which makes for entertaining videos. Unfortunately, it also makes it possible to create pornography without the consent of the people depicted. So far, those people, nearly all women, are the biggest victims when deepfake technology is misused.

Deepfakes can also be used to create videos of political leaders saying things they never said. The Belgian Socialist Party released a low-quality video of President Trump insulting Belgium that was not a deepfake but was still phony, and it got enough of a reaction to show the potential risks of higher-quality deepfakes.

Perhaps scariest of all, they can be used to create doubt about the content of real videos, by suggesting that they could be deepfakes.

Given these risks, it would be extremely valuable to be able to detect deepfakes and label them clearly. This would ensure that fake videos do not fool the public, and that real videos can be received as authentic.

Spotting fakes

Deepfake detection as a field of research began a little over three years ago. Early work focused on detecting visible problems in the videos, such as deepfakes that didn’t blink. With time, however, the fakes have gotten better at mimicking real videos and have become harder to spot for both people and detection tools.

There are two major categories of deepfake detection research. The first involves looking at the behavior of people in the videos. Suppose you have a lot of video of someone famous, such as President Obama. Artificial intelligence can use this video to learn his patterns, from his hand gestures to his pauses in speech. It can then watch a deepfake of him and notice where it does not match those patterns. This approach has the advantage of possibly working even if the video quality itself is essentially perfect.
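To make the behavior-based idea concrete, here is a toy sketch in Python. It is not how any real detector works: actual systems learn many behavioral patterns with machine learning, while this example assumes a single hypothetical measurement (average pause length in seconds) and made-up numbers. It only illustrates the principle of building a profile from verified footage and then measuring how far a new video strays from it.

```python
import statistics

def build_profile(reference_values):
    """Summarize a speaker's typical behavior as (mean, standard deviation)."""
    return statistics.mean(reference_values), statistics.stdev(reference_values)

def deviation_score(profile, observed_value):
    """Return how many standard deviations the observation lies from the mean."""
    mean, stdev = profile
    if stdev == 0:
        return 0.0 if observed_value == mean else float("inf")
    return abs(observed_value - mean) / stdev

# Hypothetical pause lengths (seconds) measured in verified footage of a speaker.
reference_pauses = [0.50, 0.55, 0.45, 0.52, 0.48]
profile = build_profile(reference_pauses)

# A video whose pauses resemble the profile scores low; an outlier scores high.
typical_score = deviation_score(profile, 0.51)
unusual_score = deviation_score(profile, 0.90)
print(f"typical: {typical_score:.2f}, unusual: {unusual_score:.2f}")
```

A high score would be a reason to look more closely, not proof of a fake, since real people also behave unusually at times.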

Other researchers, including our team, have been focused on differences that all deepfakes have compared to real videos. Deepfake videos are often created by merging individually generated frames to form videos. Taking that into account, our team’s methods extract the essential data from the faces in individual frames of a video and then track them through sets of concurrent frames. This allows us to detect inconsistencies in the flow of the information from one frame to another. We use a similar approach for our fake audio detection system as well.
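The frame-to-frame idea can be illustrated with a deliberately simplified Python sketch. It is an assumption-laden stand-in, not our team’s actual method, which relies on trained neural networks. Here each frame is assumed to have already been reduced to a short list of numbers describing the face (the `features` input is hypothetical), and the score simply compares the largest jump between consecutive frames with the typical jump.

```python
import math
import statistics

def frame_jumps(features):
    """Euclidean distance between each pair of consecutive frame vectors."""
    return [math.dist(a, b) for a, b in zip(features, features[1:])]

def inconsistency_score(features):
    """Ratio of the largest frame-to-frame jump to the median jump."""
    jumps = frame_jumps(features)
    if not jumps:
        return 0.0  # fewer than two frames: nothing to compare
    return max(jumps) / (statistics.median(jumps) + 1e-9)

# Hypothetical per-frame face features: one sequence changes smoothly,
# the other contains a sudden jump between the third and fourth frames.
smooth = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.0], [0.3, 0.0], [0.4, 0.0]]
glitchy = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.0], [1.2, 0.0], [1.3, 0.0]]

smooth_score = inconsistency_score(smooth)
glitchy_score = inconsistency_score(glitchy)
print(f"smooth: {smooth_score:.1f}, glitchy: {glitchy_score:.1f}")
```

In this toy version a score near 1 means the face information flows evenly from frame to frame, while a much larger score points to an abrupt change worth inspecting.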

These subtle details are hard for people to see, but they show how deepfakes are not quite perfect yet. Detectors like these can work for any person, not just a few world leaders. In the end, it may be that both types of deepfake detectors will be needed.

Recent detection systems perform very well on videos specifically gathered for evaluating the tools. Unfortunately, even the best models do poorly on videos found online. Improving these tools to be more robust and useful is the key next step.

Who should use deepfake detectors?

Ideally, a deepfake verification tool should be available to everyone. However, this technology is in the early stages of development. Researchers need to improve the tools and protect them against hackers before releasing them broadly.

At the same time, though, the tools to make deepfakes are available to anybody who wants to fool the public. Sitting on the sidelines is not an option. For our team, the right balance was to work with journalists, because they are the first line of defense against the spread of misinformation.

Before publishing stories, journalists need to verify the information. They already have tried-and-true methods, like checking with sources and getting more than one person to verify key facts. So by putting the tool into their hands, we give them more information, and we know that they will not rely on the technology alone, given that it can make mistakes.

Can the detectors win the arms race?

It is encouraging to see teams from Facebook and Microsoft investing in technology to understand and detect deepfakes. This field needs more research to keep up with the speed of advances in deepfake technology.

Journalists and the social media platforms also need to figure out how best to warn people about deepfakes when they are detected. Research has shown that people remember the lie, but not the fact that it was a lie. Will the same be true for fake videos? Simply putting “Deepfake” in the title might not be enough to counter some kinds of disinformation.

Deepfakes are here to stay. Managing disinformation and protecting the public will be more challenging than ever as artificial intelligence gets more powerful. We are part of a growing research community that is taking on this threat, in which detection is just the first step.

John Sohrawardi, Doctoral Student in Computing and Informational Sciences, Rochester Institute of Technology and Matthew Wright, Professor of Computing Security, Rochester Institute of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.