Richard Werner, University of Winchester

Fifty years ago, a war broke out in the Middle East which resulted in a global oil embargo and a dramatic spike in energy prices.

The war, between Israel and an Arab coalition led by Egypt and Syria, began on October 6 1973 – the Jewish holy day of Yom Kippur. The oil embargo, announced 11 days later by the Arab members of the Organisation of Petroleum Exporting Countries (Opec) under the leadership of Saudi Arabia, was followed by a major hike in the price of a barrel of oil at the end of December 1973.

Many historical accounts suggest the decade of global inflation and recession that characterises the 1970s stemmed from this “oil shock”. But this narrative is misleading – and half a century later, in the midst of strikingly similar global conditions, needs revisiting.

In fact, inflation around the world had already been picking up well before the war (which lasted less than three weeks). The Federal Republic of Germany, Europe’s largest economy and biggest energy consumer, experienced its highest inflation rates of the decade throughout 1973 – first peaking at 7.8% in June that year, before the war and any hint of an oil price increase.

So what was already driving inflation around the world at that time? A clue can be found in a 2002 paper written by Athanasios Orphanides, now a professor at MIT, while he was a senior adviser at the Board of Governors of the US Federal Reserve (America’s central bank, also known as the Fed). He wrote:

With the exception of the Great Depression of the 1930s, the Great Inflation of the 1970s is generally viewed as the most dramatic failure of macroeconomic policy in the United States since the founding of the Federal Reserve … Judging from the dismal outcomes of the decade – especially the rising and volatile rates of inflation and unemployment – it is hard to deny that policy was in some way flawed.

In reality, central bank decision-makers led by the Fed were largely responsible for the Great Inflation of the 1970s. They adopted “easy money” policies in order to finance massive national budget deficits. Yet this inflationary behaviour went unnoticed by most observers amid discussions of conflict, rising energy prices, unemployment and many other challenges.

Most worryingly, despite these failings, the world’s central banks were able to continue unchecked on a path towards the unprecedented powers they now hold. Indeed, the painful 1970s and subsequent financial crises have been repeatedly used as arguments for even greater independence, and less oversight, of the world’s central banking activities.

This article is part of Conversation Insights

The Insights team generates long-form journalism derived from interdisciplinary research. The team is working with academics from different backgrounds who have been engaged in projects aimed at tackling societal and scientific challenges.

All the while, central bank leaders have repeated the mantra that their “number one job” is to achieve price stability by keeping inflation low and stable. Unfortunately, as we continue to experience both punishing inflation rates and high interest rates, the evidence is all around us that they have failed in this job.

The latest crisis – beginning with the sudden closure of Silicon Valley Bank (SVB) in California – is a further indication that inflation, far from being brought to heel by the central banks, is causing chaos in the financial markets. Inflation pushes up interest rates, which in turn reduces the market value of bank assets such as bonds. Because many of SVB’s corporate depositors were not covered by deposit insurance and feared regulatory intervention, they rushed to withdraw their funds, triggering a catastrophic run on this solvent bank.
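The link between rising interest rates and falling bond values is standard present-value arithmetic. The sketch below is a minimal illustration, assuming a hypothetical 10-year bond with a 1.5% annual coupon and a face value of 100, bought at par; these figures are invented for illustration and are not SVB’s actual holdings. Each payment is discounted at the prevailing market yield, so when yields rise, the same fixed payments are worth less today.

```python
def bond_price(face: float, coupon_rate: float, market_yield: float, years: int) -> float:
    """Present value of an annual-coupon bond discounted at market_yield."""
    coupon = face * coupon_rate
    # Discount each annual coupon payment back to today
    pv_coupons = sum(coupon / (1 + market_yield) ** t for t in range(1, years + 1))
    # Discount the repayment of the face value at maturity
    pv_face = face / (1 + market_yield) ** years
    return pv_coupons + pv_face


# Hypothetical bond bought at par when market yields were 1.5%
price_before = bond_price(100, 0.015, 0.015, 10)
# The same bond after market yields rise to 4.5%
price_after = bond_price(100, 0.015, 0.045, 10)

print(f"Price at 1.5% yield: {price_before:.2f}")
print(f"Price at 4.5% yield: {price_after:.2f}")
print(f"Mark-to-market loss: {100 * (1 - price_after / price_before):.1f}%")
```

The loss stays on paper if the bond is held to maturity, but a bank forced to sell bonds to meet withdrawals has to realise it.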

When the establishment of the Fed was proposed more than a century ago, it was sold to Congress as the solution to this vulnerability in retail banking, as it could lend to solvent banks facing a run. In the event, the Fed did not lend to some 10,000 banks in the 1930s, letting them fail, and this time around it did not lend to SVB until it was closed and taken over.

Now more than ever, I believe the role of central banks in our economies and societies demands greater scrutiny. This is a story of how they have become so powerful, and why it should concern us all.

Myth busting 1: it’s not about the war

In early 2023, the global financial picture feels disconcertingly similar to 50 years ago. Inflation and the cost of living have both risen steeply, and a war and related energy supply problems have been widely labelled as a key reason for this pain. In autumn 2022, inflation reached double digits in the UK and across the eurozone. Italy recorded 12.6% annual inflation while some countries, including Estonia, saw inflation go as high as 25%.

In their public statements, central bank leaders have blamed this on a long (and movable) list of factors – most prominently, Vladimir Putin’s decision to send Russian troops to fight against Ukrainian armed forces. Anything, indeed, but central bank policy.

In October 2022, the president of the European Central Bank, Christine Lagarde, claimed in an interview on Irish TV that inflation had “pretty much come about from nowhere”. Yet when we chart these inflationary patterns around the world, we find a similar puzzle to that seen 50 years ago.

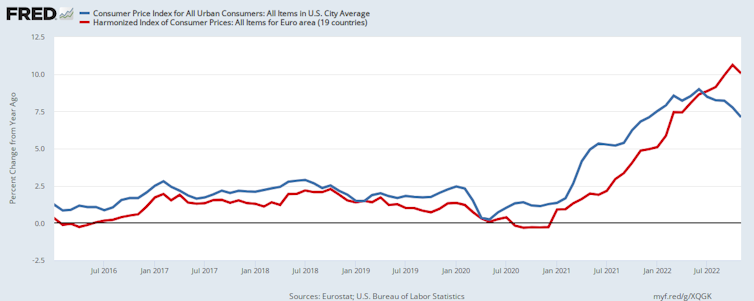

Russia commenced its military operations in Ukraine on February 24 2022. An EU ban on crude oil and refined petroleum products from Russia came into effect in December 2022, while Russian gas imports have never been banned by the EU (although they were discouraged politically). Yet as Figure 1 shows, inflation had already been increasing in the US and Europe long before Putin gave the order to move his troops across the border – indeed, as far back as 2020.

Figure 1: inflation rates 2016-2022, US and eurozone

By February 2022 (the month of Russia’s invasion), the UK’s 12-month Consumer Price Index inflation rate was already at 6.2%. At the same time, inflation was at 5.2% in Germany and 7.9% in the US.

But what about another “blue moon” event: COVID? The declaration of a pandemic by the World Health Organization on March 11 2020 led to the shutting down of many economies and unprecedented restrictions on people’s movement. The responses of central banks and governments to this situation did add to the inflationary conditions already being created by the unwise (and coordinated) actions of the world’s central banks. But again, these pandemic responses were not the root cause.

To understand the real roots of our current inflation crisis, we must instead address another widely held misconception: how money is created.

Myth busting 2: where money really comes from

The process by which banks create money is so simple that the mind is repelled. When something so important is involved, a deeper mystery seems only decent.

This perceptive insight by the American economist J.K. Galbraith in 1975 was supported some 35 years later when, along with my Goethe University students, I conducted a survey of more than 1,000 passers-by in central Frankfurt. We found that more than 80% of those interviewed believed that most of the world’s money is created and allocated by either governments or central banks. An understandable view, but wrong.

In fact, my empirical studies of our global monetary system have demonstrated that it is high-street or retail banks that produce the vast majority – around 97% – of the world’s money supply. Every time a bank grants a loan, it is creating new money that is added to the economy’s overall money supply.
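The claim that a bank loan creates new money can be illustrated with simple double-entry bookkeeping. This is a minimal sketch under simplifying assumptions: a single hypothetical bank, with illustrative class and field names and equity capital ignored. Granting a loan books the loan as an asset and, at the same moment, credits the borrower with a new deposit as a liability, so total deposits rise without any existing customer’s balance being reduced.

```python
from dataclasses import dataclass, field


@dataclass
class Bank:
    """Hypothetical, highly simplified bank balance sheet (equity ignored)."""
    reserves: float = 0.0                                      # asset
    loans: dict[str, float] = field(default_factory=dict)      # assets
    deposits: dict[str, float] = field(default_factory=dict)   # liabilities

    def grant_loan(self, borrower: str, amount: float) -> None:
        # Asset side: the bank books a claim on the borrower
        self.loans[borrower] = self.loans.get(borrower, 0.0) + amount
        # Liability side: the borrower's account is credited with a new
        # deposit; no other customer's balance is reduced to fund it
        self.deposits[borrower] = self.deposits.get(borrower, 0.0) + amount

    def assets(self) -> float:
        return self.reserves + sum(self.loans.values())

    def liabilities(self) -> float:
        return sum(self.deposits.values())


bank = Bank(reserves=1_000.0, deposits={"saver": 1_000.0})
before = bank.liabilities()
bank.grant_loan("small_firm", 500.0)
after = bank.liabilities()

print(f"Deposits before: {before:.0f}, after: {after:.0f}")
print(f"Balance sheet balances: {bank.assets() == bank.liabilities()}")
```

Reserves are untouched at the moment of lending; the sketch leaves out what happens when the borrower later pays someone who banks elsewhere.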

In contrast, governments don’t create any money these days. The US government last issued money in 1963, stopping after President John F Kennedy’s assassination that year. The UK government stopped issuing money in 1927 and Germany even earlier, around 1910. Central banks, meanwhile, only create around 3% of the world’s money supply.

For growth to occur, more transactions need to take place this year than last. This can only happen if the supply of money available for these transactions increases – in other words, if retail banks issue more loans. If used correctly, this credit creation can be a powerful tool for increasing growth and productivity. This was the basis of my proposal to help Japan’s flatlining economy in the 1990s, which would later become widely known as “quantitative easing”, or QE.

However, such a strategy carries risks too – in particular, the potential for creating inflation, if this new money is used at the wrong time or for the wrong purposes.

The birth of quantitative easing

In 1995, while chief economist at Jardine Fleming Securities in Tokyo, I published an article in Japan’s leading financial newspaper, the Nikkei, entitled How to Create a Recovery through “Quantitative Monetary Easing”. It proposed a new monetary policy for the Bank of Japan that could stave off the country’s looming banking crisis and economic depression.

The core of the idea was increasing Japan’s total transactions in the economy by increasing the supply of money for the nation’s “real economy”. This could be achieved by encouraging retail banks to issue more loans to companies for investment, thus stimulating economic recovery.

However, QE’s subsequent depiction as a form of “magic money tree” is misplaced. In my 1997 paper and subsequent book, I stressed the difference between newly-created money when it is used for productive purposes – in other words, for business investment that creates new goods and services or increases productivity – and when it is used for unproductive purposes such as financial asset and real estate transactions. These merely transfer ownership from one party to another without adding to the nation’s income.

If new bank credit is used for productive business investments such as loans to small firms, there will be job creation and sustainable economic growth without inflation. Furthermore, if this credit is pumped into the economy via many small retail banks to even more small firms, it would have the additional benefit of leading to a more equitable distribution of wealth.

By contrast, if new credit is used for unproductive purposes such as trading financial assets (including bonds, shares and futures) or real estate, this leads to asset price inflation, a form of economic bubble which can trigger a banking crisis if the boom is large enough. Similarly, if bank credit is created chiefly to support household consumption, this will inevitably result in consumer price inflation.

Unfortunately, in the UK and many other countries – especially those with only a few, very large retail banks – there has been a significant shift of bank credit away from lending for productive business investment to lending for asset purchases. As big banks want to do big deals, bank lending for asset purchases now accounts for the vast majority of lending (75% or more, according to my analysis of Bank of England data).

In contrast, just before the first world war, when there were many more small banks in the UK, more than 80% of bank lending was for productive business investment. This decline in bank lending for business investment has had many consequences, including falling economic growth and lower productivity in the UK. Other countries such as Germany that have maintained a system of many small, local retail banks have seen better levels of productivity as a result.

Returning to Japan: having initially resisted my proposal, the Bank of Japan announced the introduction of the world’s first programme of QE in March 2001. Unfortunately, it did not follow the policy I had recommended. Its approach – buying well-performing assets such as government bonds from retail banks – had no effect on the Japanese economy, because it did not improve retail banks’ willingness to lend to businesses. In other words, no new money was being created for productive purposes.

Nonetheless, over the two decades since, QE has become a monetary policy beloved of central banks across the world as they have sought to keep their economies looking strong in the face of serious economic challenges. The next major global test of this policy was the 2007-08 global financial crisis and associated “Great Recession”.

How the Fed tackled the 2008 financial crisis

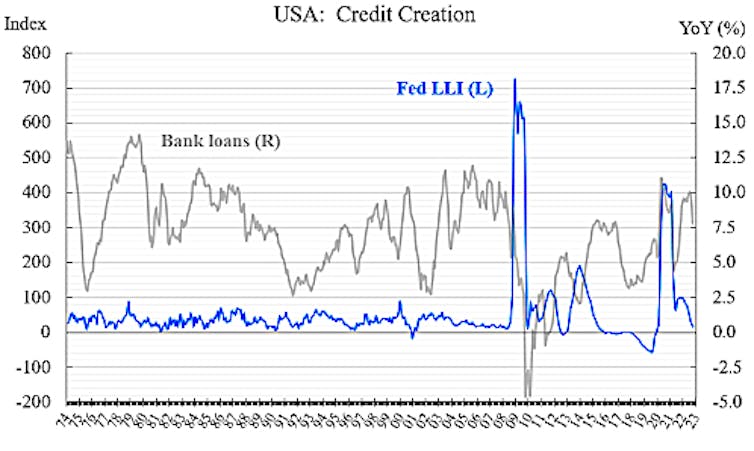

The Great Recession began with the freezing of the interbank market, as overextended retail banks (following years of lax oversight of their property lending) became doubtful about each other’s solvency. This was exacerbated by the decision of the Fed in September 2008 not to rescue or merge Lehman Brothers, but instead let it go bankrupt. The resulting, dramatic collapse in US retail bank lending (the grey line in Figure 2) ensured that the US housing bubble burst, causing economic shockwaves across the world.

Figure 2: credit creation by Fed and US retail banks, 1974-2023

The freezing of the interbank market threatened the world’s banking system and demanded urgent action by the Fed and other central banks. In response, the Fed – under its then-chairman Ben Bernanke, who had previously been involved in the discussions on how to revive the flagging Japanese economy in the 1990s – partly adopted the “true” type of QE that I had earlier proposed for Japan. This meant that, within around 18 months, US retail banks were boosting their lending again, leading to a recovery a further six months later.

The Fed’s version of QE was to follow my recommendation to purchase non-performing assets from banks – in other words, it purchased their bad debts, thus cleaning up their balance sheets. This did not inject any new money into the US economy and so did not create inflationary pressures. But it helped the retail banks – those that had not failed, at least – get off their knees and be ready to do normal business again, thus ending the credit crunch after the surge in defaulted loans.

As a result, US retail banks were issuing new loans by 2010 – earlier than in other countries where central banks did not adopt this strategy but instead copied the failed Bank of Japan version of QE. We can see this in Figure 2, above, in the surge of the blue line (the Fed Leading Liquidity Index) in 2009 and the subsequent recovery of the grey line (retail bank loans) in 2010, which helped the US become the first major economy to recover from the 2008 crisis.

When banking experts surveyed this vast QE programme undertaken by the Fed in late 2008 and subsequently, many feared it would lead to the return of inflation. It did not – principally because retail bank credit creation had contracted hugely as the interbank market had imploded (the grey line in Figure 2), and because the Fed adopted the aspect of QE that did not increase the money supply via new bank loans.

So, the Fed’s use of QE to bring the US economy “back to life” was regarded as a relative success, and the global media instead reserved most of its criticism for the damage done to economies by “greedy” retail banks.

This meant that, quietly, following this global financial disaster, the central banks were able to increase their powers further, in the name of greater scrutiny of the financial sector. The European Central Bank was particularly successful in expanding its powers over the following decade.

At the same time, QE was seen by some as an apparent “miracle cure” for future financial crises. This came to a head in March 2020, when the central bankers began a programme of QE that is at the root of many of our current economic and societal difficulties.

The real cause of our current inflationary crisis

In May 2020, as I conducted my latest monthly analysis of the quantity of credit creation across 40 countries, I was startled to find that something extraordinary had been happening since March that year. The major central banks across the globe were boosting the money supply dramatically through a coordinated programme of QE.

This was the version of QE that I had recommended as the second policy step in Japan in the 1990s – namely, for the central bank to purchase assets from outside the banking sector. These payments forced retail banks to create new credit in a massive burst of money supply not previously seen in the post-war era, and the firms and non-bank financial institutions that had sold assets to the Fed gained new purchasing power as a result.
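The difference between a central bank buying assets from banks and buying them from outside the banking sector can be sketched in the same simplified bookkeeping style. This is a minimal illustration, assuming a hypothetical aggregate of retail banks with invented names and figures, and tracking only three items: reserves held at the central bank, bonds, and customer deposits (the part of broad money that households and firms spend). A purchase from a bank swaps a bond for reserves and leaves deposits unchanged; a purchase from a non-bank is settled through the seller’s bank, which receives reserves and credits the seller’s deposit account, so deposits rise.

```python
from dataclasses import dataclass


@dataclass
class BankingSystem:
    """Hypothetical aggregate of retail banks (amounts in arbitrary units)."""
    reserves: float   # balances the banks hold at the central bank
    bonds: float      # bonds held by the banks themselves
    deposits: float   # customer deposits, counted in broad money

    def cb_buys_from_bank(self, amount: float) -> None:
        # A bank swaps a bond for central bank reserves;
        # customer deposits, and hence broad money, are unchanged
        self.bonds -= amount
        self.reserves += amount

    def cb_buys_from_non_bank(self, amount: float) -> None:
        # The non-bank seller is paid via its bank: the bank receives
        # reserves and credits the seller's deposit account
        self.reserves += amount
        self.deposits += amount


via_bank = BankingSystem(reserves=100.0, bonds=300.0, deposits=1_000.0)
via_bank.cb_buys_from_bank(50.0)

direct = BankingSystem(reserves=100.0, bonds=300.0, deposits=1_000.0)
direct.cb_buys_from_non_bank(50.0)

print(f"Deposits after purchase from a bank:     {via_bank.deposits:.0f}")
print(f"Deposits after purchase from a non-bank: {direct.deposits:.0f}")
```

In both cases the banks end up with the same extra reserves; only the purchase from outside the banking sector adds to the deposits that can be spent in the wider economy.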

Even the Bank of Japan, having previously argued for two decades that it could not possibly purchase assets from anyone other than banks, suddenly engaged in this unusual operation at the same time as other central banks, and on a massive scale.

The reasons for this coordinated policy are not immediately apparent, although there is some evidence that it was sparked by a proposal presented to central bankers by the multinational investment company Blackrock at the annual meeting of central bankers and other financial decision-makers in Jackson Hole, Wyoming in August 2019. Soon after this, difficulties in the Fed’s repurchase agreement (“repo”) market in September 2019, triggered by private banking giant JP Morgan, may have made up their minds.

Apparently agreeing with my critique that pure fiscal policy does not result in economic growth unless it is backed by credit creation, Blackrock had argued at Jackson Hole that the “next downturn” would require central banks to create new money and find “ways to get central bank money directly in the hands of public and private sector spenders” – what they called “going direct”, bypassing the retail banks. The Fed knew this would create inflation, as Blackrock later confirmed in a paper which stated that “the Fed is now committing to push inflation above target for some time”.

This is precisely what was implemented in March 2020. We know this both from available data and because the Fed, largely without precedent, hired a private-sector firm to help it buy assets – none other than Blackrock.

Having “cried wolf” about the inflationary risk of introducing QE in 2008, and following more than a decade of resolutely low global inflation, many banking and economic experts thought that the similarly aggressive credit creation policies of the Fed and other central banks in 2020 would, once again, not be inflationary.

However, this time the economic conditions were very different – there had been no recent slump in the supply of money via retail bank loans. Also, the policy differed in a crucial aspect: by “going direct”, the Fed was itself now massively expanding credit creation, the money supply and new spending.

Meanwhile, the COVID measures imposed by governments also focused on bank credit creation. In parallel with unprecedented societal and business lockdowns, retail banks were instructed to increase lending to businesses, with governments guaranteeing these loans. Stimulus checks were paid out to furloughed workers, and both central banks and retail banks also stepped up purchases of government bonds. So both central and commercial banks added to the supply of money, with much of it being used for general consumption rather than for productive purposes (loans to businesses).

As a result, the money supply ballooned by record amounts. The US’s “broad” money supply metric, M3, increased by 19.1% in 2020, the highest annual rise on record. In the eurozone, the M1 money supply grew at an annual rate of 15.6% in December 2020.

All of this boosted demand, while at the same time the supply of goods and services was limited by pandemic restrictions that immobilised people, shut down many small firms and disrupted some supply chains. It was a perfect recipe for inflation – and significant consumer price inflation duly followed around 18 months later, in late 2021 and 2022.

While this inflation was certainly exacerbated by the COVID restrictions, it had nothing to do with Russian military actions or sanctions on Russian energy – and a lot to do with the central banks’ misuse of QE. I believe the high degree of coordination of the central banks in adopting this QE strategy, and the empirical link with our current period of inflation, means their policies should be given more of a public airing. But the subsequent war has muddied the waters and deflected attention from important underlying questions.

For example, critics of the unprecedented levels of national debt in the world – with the US alone now owing more than US$31 trillion – have long been warning that the likely way out for countries that have become “addicted to easy money” is an inflationary road that silently erodes the value of this debt. But at what cost for the general public?

Meanwhile, the concentration of powers among central banks and a few favoured advisers, such as Blackrock, has led to widespread questions about the way the global economy is controlled by a few key figures. And the recent emergence of a new form of digital currency is another potentially significant chapter in this story of central bank dominance.

A new tool to increase central bank control?

At the same time as the UK government imposed the first lockdown in March 2020, the Bank of England (BoE) issued its first major discussion paper (and held a first public seminar) about its perceived need to introduce a central bank digital currency. (It is noticeable how many central banks seemed spurred on in their plans for digital currencies by the COVID digital vaccination passport concepts that were advanced during the pandemic.)

Three years later, the BoE has published a consultation paper in conjunction with the UK Treasury that sets out “the case for a retail central bank digital currency”. The paper explained that:

The digital pound would be a new form of sterling … issued by the Bank of England. It would be used by households and businesses for their everyday payments needs. It would be used in-store, online and to make payments to family and friends.

While the consultation lasts until June 7 2023, we are already being informed that a state-backed UK digital pound is likely to be launched “later this decade” – possibly as soon as 2025.

In fact, digital currencies have been in use for decades, in the form of the electronic deposits issued by retail banks. However, as the name suggests, a central bank digital currency (CBDC) – if widely adopted – would shift control of our money supply irrevocably away from the decentralised system we have, based on retail banks, in favour of the central banks.

In other words, the “umpires of the game” are preparing to step into the arena and offer current accounts to the general public, competing directly against the retail banks they are supposed to regulate – a clear conflict of interest. From the US to Japan, central banks – already more powerful and independent than ever before – have expressed their desire to create and control their own CBDCs, potentially using technology similar to cryptocurrencies such as Bitcoin. In my view, this poses many risks for the way economies and societies function.

Unlike the unregulated cryptocurrencies, CBDCs would come with the full backing and authority of the central banks. In any future financial crisis, retail banks could struggle to withstand this unfair competition, with customers shifting their deposits to CBDCs thanks to their central bank and government support.

The conflict of interest is compounded as central banks set the policies that can make or break retail banks (see the recent failures of SVB and Signature Bank). Added to this, central banks seem to be favourably inclined towards big bank bailouts, while small banks are seen as dispensable.

Some countries – perhaps even the eurozone – could be left with a Soviet-style monobank system, where the only bank in town is the central bank. This would be disastrous: the useful functions of retail banks are to create the money supply and allocate it efficiently via thousands of loan officers on the ground across the country.

This form of productive business investment, which creates non-inflationary growth and jobs, is best achieved via lending to small and medium-sized enterprises (SMEs). Neither central banks nor cryptocurrencies fulfil these decentralised but crucial functions that are at the core of successful capitalism, from the US and Germany to Japan and China.

But the further concentration of powers into the hands of the central banks is not the only danger posed by CBDCs. Their biggest attraction for the central planners is that they facilitate “programmability” – in other words, control over how an individual is permitted to use that currency. As Agustin Carstens, general manager of the Bank for International Settlements (which is owned by the central banks), explained in 2021:

A key difference with the CBDC is that the central bank will have absolute control on the rules and regulations that will determine the use of that expression of central bank liability. And also, we will have the technology to enforce that.

Critics of a central bank might suddenly find they are not allowed to pay for anything any longer – in a manner reminiscent of the way that protesting Canadian truckers were frozen out of their funds by the Canadian government in February 2022.

Additionally, central planners could theoretically restrict purchases to a limited geographical area, or to only the “right” items in the eyes of the authorities, or only in limited amounts – for example, until you have used up your “carbon credit” budget. The much discussed idea of a “universal basic income” could serve as the carrot for people to accept a central electronic currency that could enact a Chinese-style social credit system and even, in the future, exist in the form of an electronic implant.
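The kinds of restriction described above can be pictured as rules checked before each payment is allowed to go through. The sketch below is purely hypothetical: it does not describe any actual or proposed CBDC design, and every class, rule, category and limit in it is invented for illustration. It shows how a programmable ledger could, in principle, refuse a payment because of where it is made, what it is for, or how much has already been spent.

```python
from dataclasses import dataclass


@dataclass
class Payment:
    amount: float
    region: str
    category: str


@dataclass
class Wallet:
    """Hypothetical programmable wallet; all rules are illustrative only."""
    balance: float
    allowed_regions: set[str]
    blocked_categories: set[str]
    spending_cap: float
    spent: float = 0.0

    def pay(self, p: Payment) -> tuple[bool, str]:
        # Each check mirrors one kind of restriction discussed in the text
        if p.region not in self.allowed_regions:
            return False, "outside permitted area"
        if p.category in self.blocked_categories:
            return False, "item category not permitted"
        if self.spent + p.amount > self.spending_cap:
            return False, "spending cap exceeded"
        if p.amount > self.balance:
            return False, "insufficient funds"
        self.balance -= p.amount
        self.spent += p.amount
        return True, "approved"


wallet = Wallet(balance=500.0, allowed_regions={"home_town"},
                blocked_categories={"flight"}, spending_cap=200.0)
for payment in [Payment(50.0, "home_town", "groceries"),
                Payment(80.0, "elsewhere", "groceries"),
                Payment(120.0, "home_town", "flight"),
                Payment(180.0, "home_town", "fuel")]:
    print(payment, "->", wallet.pay(payment))
```

Only the first payment is approved, even though the wallet still holds funds: the refusals come from the rules, not from a lack of money.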

In contrast, no such control is possible with old-fashioned cash – now recognised by many as a beacon of freedom.

Why we should resist bank centralisation

For those who think I am being alarmist, do these possibilities seem so far-fetched if we think about some parts of present-day China, for example?

But it is also worth noting that China’s recent history is not one of unwavering centralisation – at least in economic terms. When Deng Xiaoping became its paramount leader in December 1978, he recognised that the centralisation of banking under a Soviet-style monobank was holding back the country’s economic growth.

Deng quickly switched to decentralisation by creating thousands of commercial banks over the following years – mostly small local banks that would lend to small firms, creating jobs and ensuring high productivity. This allowed the creation of 40 years of double-digit economic growth, lifting more people out of poverty than ever before.

By contrast, the UK used to have hundreds of county and country banks, but they were all bought up by the major retail banks such that a century ago, the “Big Five” banks had become dominant – and have largely remained so ever since. Over recent decades, these banks have been quick to close local branches.

The European Central Bank, meanwhile, has declared it wants to reduce the number of retail banks – to date, 5,000 have disappeared under its watch. And in the US, some 10,000 banks have disappeared since the 1970s. It is the small banks that disappear.

Our empirical study of the US banking sector showed that big banks don’t want to lend to small firms. However, most employment in the economy is with SMEs, which our study suggests will only thrive if we have a decentralised banking system with many small, local banks.

In Germany, these local community banks have survived for more than 200 years because they use the co-operative voting system of “one shareholder, one vote”. That system of “economic democracy” prevents takeovers and hence explains why German SMEs are by far the most successful in the world, contributing significantly to exports and Germany’s high productivity.

The absence of local banks in the UK should be a key part of any explanation of the nation’s “productivity puzzle”, although talking about it is not encouraged by the big bank cartel known as the City of London.

In my view, it is time to re-introduce local banks in the UK. This will help us build a more decentralised banking system, and hence keep at bay the dangers of excessive centralisation of the economy, including from CBDCs. For this purpose, I established the social enterprise Local First CIC, which has helped found the budding Hampshire Community Bank as a prototype.

The key focus of this bank is to help small firms in Hampshire, with all loan decisions being made on the ground by people in Hampshire, deposits being used to fund productive local lending, and most profits being returned to the people of Hampshire.

But wherever you live and whoever you bank with, I believe it is important that we resist the introduction of CBDCs, use cash as much as possible, and support our local small shops and local banks. Where there are no longer local banks, we should get together and establish new ones.

CBDCs are not a solution to a problem, but the latest goal in the multi-decade struggle by central planners for maximum powers – at the unnecessary cost of crises, inflation, economic dislocation and unemployment.


Richard Werner, Professor of banking and economics, University of Winchester

This article is republished from The Conversation under a Creative Commons license. Read the original article.